The day that artificial intelligence overruns local newspapers, you can say au revoir to civil discussion.

AI assistants like Alexa, Siri, Grammarly, and ChatGPT have become common fixtures of daily life, and they will only become more so. Now, perhaps sooner than many of us thought, the technology threatens to replace forms of expression that make us human, including art and written work.

So let me make our stance clear now: The Byway is meant to be a forum for human discussion, not discussion between AI.

That’s not to say that AI will ever take over the papers. While it has many advantages, the aimless imitator has major issues, especially in journalism: its inability to understand slang, localization, or ethics; its creative ideas that aren’t new; and its tendency toward bias. Those issues have me feeling pretty secure on that front. Of course, that won’t stop it from trying.

But just for fun, if AI did write the newspaper, what would it look like?

Pros of AI

If AI wrote the newspaper, there would be less room for human error, it would write more creatively, and it would be more efficient. All of these things would give it a pretty successful start in the news industry.

I recently read an article about a Panguitch volleyball game that drew on an impressive amount of statistical data from MaxPreps.

It turned out the article had been generated by an AI called infoSentience.

The infoSentience website markets its AI by saying it will “avoid typos and data analysis mistakes” and that “our AI creates human narratives, without making human mistakes.” Was that AI-written? The program can transform large amounts of data into human-sounding articles, even including creative phrases (albeit clichés), like describing the game as a “cat-fight” between the two feline mascots.

If AI wrote the newspaper, typos, mixed data points, and other human foibles would not be an issue.

Jaeyeon Chung, an assistant professor of business at Rice University, wrote in The Conversation about an experiment testing AI’s ability to generate interesting ideas. Her study compared the creativity of ideas that participants generated alone, with the help of Google, and with the help of ChatGPT.

“Interestingly,” she wrote, “ideas generated with ChatGPT – even without any human modification – scored higher in creativity than those generated with Google.” Chung attributed these findings to ChatGPT’s ability to integrate unrelated information that humans would otherwise have to sift through and piece together themselves.

If AI wrote the newspaper, the article you’re reading now might have more creative connections.

People often use AI because they don’t have the time or energy to produce their content themselves. It can search through large amounts of data, such as everything available on MaxPreps, or one of the largest and most daunting data pools of all: the internet.

A person, by contrast, would get tired after clicking through a few websites and trying a few search queries, and would most likely settle on one of the most easily accessible results at the top of the page. Because Google’s algorithm rewards credible sites by placing them near the top, this often yields good fruit, but not always.

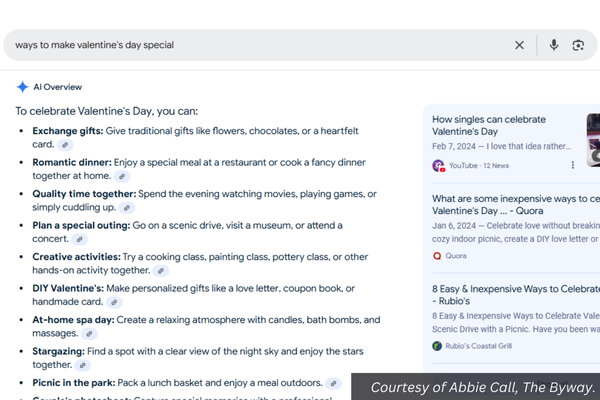

Google’s AI Overview can sift through all the information on the web and find the source with the closest answer to your question without getting tired.

It can also deliver that information immediately, faster than some websites can even load.

When a prominent ecclesiastical leader warned young people about leaning too heavily on AI for spiritual learning, my mother-in-law was confused. She had never even used a smartphone, and she had no idea what an AI assistant was.

To explain it to her, we pulled up ChatGPT and typed into the text box, “Write me a sacrament meeting talk including quotes from the Book of Mormon and general authorities.” She was shocked when, before her eyes, five paragraphs of speech-worthy text trickled down the page in about two seconds. This type of AI response is commonplace to teenagers and young people, but not always to people born before the internet existed (dinosaurs, as the young people say).

Alexa, Siri, and Google will answer most questions, and if they don’t, ChatGPT or Google’s AI Overview probably will.

If AI wrote the newspaper, the time it took to break big news would probably be a lot shorter.

Of course, if AI wrote the newspaper, it would still make errors, even in ways humans don’t.

Cons of AI

No matter how much it progresses, AI will never be human, and it’s still reliant on training material rather than its own intuition for ideas. That comes with some inherent issues.

If AI wrote the newspaper, it would lose track of the evolving language system that is slang, it would be biased, and its ideas, however “creative,” wouldn’t be new.

For all its data analysis and quick responses, AI is notorious for not being able to comprehend slang, especially when a word can only be understood with the help of some cultural knowledge.

This makes sense when you consider that AI works on a set of rules, just like any other kind of code. It can be a very complex set of rules, but rules all the same. Slang changes the rules, so words can come to mean their opposite or stretch far from what they used to mean. “Literally” means not literally. “F in the chat” doesn’t mean you failed, nor is it short for a swear word. “Very mindful, very demure” means silly. Believe it or not, AI doesn’t keep up with it any better than your parents do.

It’s the same with culture. However much training material about Panguitch you fed an AI, do you think it would ever understand the cultural complexities that make this place different from any other? Not yet, anyway.

That’s why infoSentience can write an article about a Panguitch volleyball game but can’t really grasp all the meanings of the word “cat-fight,” and it has no background to understand that 1A Panguitch losing a close game to 3A Richfield, a school in a larger classification, is not necessarily a bad thing.

Plus, “ChatGPT doesn’t generate truly novel ideas,” wrote Chung. “It recognizes and combines linguistic patterns from its training data.” I think I found the same cat-fight metaphor in about a dozen different infoSentience articles. Still creative, I suppose, but lame once you’ve read it two or three times.

Biases in AI are also difficult to root out. Amazon was reminded of this recently when its voice assistant, Alexa, supposedly a reliable source for U.S. election news, gave supportive answers about voting for Kamala Harris, but refused to answer the same questions about Donald Trump.

On another occasion, the assistant was blasted for calling the 2020 election “stolen” and claiming it involved fraud.

Whether or not these responses were intentional (and what researchers have said about AI bias makes me think they probably weren’t), they reflected a bias in either the training material or the algorithm Alexa used to tell people about the election.

Though biases have presented themselves since AI technology first began appearing in the workplace, the problem still hasn’t been fixed, especially when it comes to race and gender bias in hiring and admissions. Chung backed this up in her article about creativity.

On any topic, at least for now, as long as humans have biases, you can bet AI has them too.

Not Human

So, knowing the pros and cons, if AI wrote the newspaper, would you read it? The typos would disappear, and it would probably still be an enjoyable read, especially if it got to you sooner. But it also wouldn’t know you, it would be biased, and it wouldn’t ever come up with the occasional truly unique idea that makes you think.

Ultimately, an AI-written newspaper is out of the question for The Byway simply because it isn’t human.

Humans create meaning in each other’s lives and grow through shared experience. Humans have stories, feelings, wisdom shaped by experiences, ingenuity, work ethic, and unique combinations of values.

When it comes to AI writing, I think Rachel Khong wrote my thoughts out well in her Atlantic article: “When we write, we are picking and choosing—consciously or otherwise—what is most substantial to us. Behind human writing is a human being calling for attention and saying, Here is what is important to me.”

The things that are important to you and me are what make the newspaper worth reading. Otherwise, you may pick up the paper one day and find that yours are the first human eyes to scan the columns of text in front of you, which is exactly what would happen if AI wrote the newspaper.

– by Abbie Call

Feature image caption: This robot could help you write your article. But not for this paper!

Read more about what our newspaper will publish on our Call for Papers page.

Abbie Call – Cannonville/Kirksville, Missouri

Abbie Call is a journalist and editor at The Byway. She graduated in 2022 with a bachelor’s degree in editing and publishing from Brigham Young University. Her favorite topics to write about include anything local, Utah’s megadrought, and mental health and meaning in life. In her free time, she enjoys reading, hanging out with family, quilting, and hiking.

Find Abbie on Threads @abbieb.call or contact her at abbiecall27@gmail.com.